New Version 0.4 of the Operator Toolbox Extension available.

tftemme

Administrator, Employee, RapidMiner Certified Analyst, RapidMiner Certified Expert, RMResearcher, Member Posts: 164

New Version 0.4.0 of the Operator Toolbox Extension available.

We are happy to announce the release of a new version of the Operator Toolbox Extension. Version 0.4.0 brings several enhancements:

Stem Tokens Using ExampleSet

This Operator is an enhancement to the Text Processing Extension. It can be used inside a Process Documents Operator. It replaces terms in Documents using pattern matching rules. The replacement rules are provided by an ExampleSet.

Here are the results on the sentence:

“sunday monday tuesday wednesday thursday friday are all days of week. Sunday and Saturday are not”. The Stem Tokens Using ExampleSet Operator replaces all words matching .*day with weekdays. The left image shows the result of the Process Documents without the Stem Tokens Using ExampleSet Operator, the right one with the Operator.

The new Operator is like the Stem (Dictionary) Operator, but uses an ExampleSet instead of a file.
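The rule-based replacement described above can be sketched in a few lines of Python. This is only an illustration, not the Operator's implementation: the `RULES` list stands in for the ExampleSet, and the function name, the full-match behaviour, the first-match-wins order and the case-sensitive matching are all assumptions.

```python
import re

# Hypothetical rule table standing in for the ExampleSet: (pattern, replacement).
RULES = [(r".*day", "weekdays")]


def stem_tokens(tokens, rules=RULES):
    """Replace each token that fully matches a rule pattern; the first match wins."""
    compiled = [(re.compile(pattern), replacement) for pattern, replacement in rules]
    result = []
    for token in tokens:
        for pattern, replacement in compiled:
            # Assumption: a rule applies only if the whole token matches.
            if pattern.fullmatch(token):
                token = replacement
                break
        result.append(token)
    return result


tokens = "sunday monday are all days of week".split()
stemmed = stem_tokens(tokens)
```

With these assumptions, "sunday" and "monday" are replaced while "days" is left alone, because it does not end in "day".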

Weight of Evidence

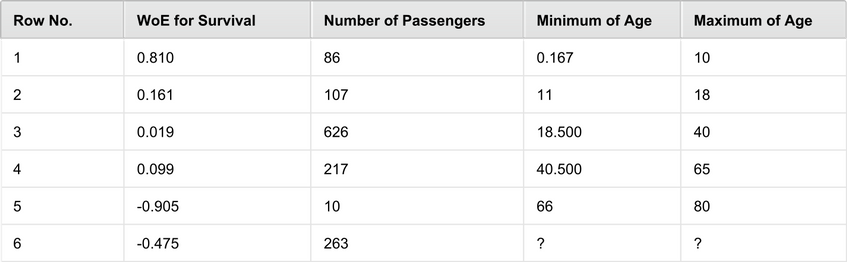

This Operator introduces a new method for discretization. The generated value expresses the chance of a binominal Attribute (called the base of distribution) having a positive or negative value in the discretized group. This value is the same for all Examples that belong to the same group. Unlike other discretization Operators, this one assigns a numeric value to each class.

If the Weight of Evidence value is positive, the Examples from that group are more likely to have the positive value for the base of distribution Attribute than the data set as a whole. The higher the Weight of Evidence value, the greater the chance of a positive base of distribution value.
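To make the sign convention concrete, here is a minimal sketch using the common textbook definition of Weight of Evidence, the natural log of a group's share of all positives divided by its share of all negatives. The post does not state the exact formula, log base or smoothing the Operator uses, so treat this as an assumption; the function name and the toy data are made up.

```python
import math
from collections import Counter


def weight_of_evidence(groups, labels, positive):
    """Return {group: ln(share of all positives / share of all negatives)}."""
    pos = Counter(g for g, y in zip(groups, labels) if y == positive)
    neg = Counter(g for g, y in zip(groups, labels) if y != positive)
    pos_total, neg_total = sum(pos.values()), sum(neg.values())
    woe = {}
    for g in set(groups):
        # Groups with no positives or no negatives would need smoothing; skipped here.
        if pos[g] and neg[g]:
            woe[g] = math.log((pos[g] / pos_total) / (neg[g] / neg_total))
    return woe


# Made-up toy data, not the Titanic sample from the tutorial process.
groups = ["child", "child", "child", "adult", "adult", "adult", "adult", "adult"]
labels = ["yes", "yes", "no", "yes", "no", "no", "no", "no"]
woe = weight_of_evidence(groups, labels, positive="yes")
```

In this toy data the "child" group has a positive value and the "adult" group a negative one, matching the interpretation given above.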

The attached tutorial process illustrates the benefits of using this Operator as a substitute for other discretization methods. The following image shows the result of the tutorial process, in which the Weight of Evidence Operator is applied to the Titanic data sample.

Split Document into Collection

This Operator splits a Document into a Collection of Documents according to the split string parameter.

This is very helpful if, for example, you have read in a complete text file (with the Read Document Operator) and want to split it into separate lines to process the file line by line.

Check out the tutorial process of the Operator to get an impression of how it works.
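Conceptually, the split works like the following Python sketch. The function name is hypothetical, and whether the Operator treats the split string literally or as a regular expression is not stated here, so the literal split is an assumption.

```python
def split_document(text, split_string="\n"):
    """Split one document into a list of documents at every occurrence of split_string."""
    # Assumption: the split string is taken literally, not as a regular expression.
    return text.split(split_string)


document = "first line\nsecond line\nthird line"
collection = split_document(document)
```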

Dictionary Based Sentiment & Apply Dictionary Based Sentiment

In some cases, you want to build a sentiment model based on a given list of weights. The weight represents the negativity/positivity of a word. This dictionary should have a structure like this:

| Word | Weight |
| --- | --- |
| Abnormal | -1 |
| Aborted | -0.4 |
| Absurd | -1 |
| Agile | 1 |
| Affordable | 1 |

The new Dictionary Based Sentiment Operator can handle such an input and creates a model out of it. We use the word list provided at https://www.cs.uic.edu/~liub/ which has two separate files for positive and negative words. After a quick pre-processing, we can build the dictionary based model.

The created model, shown below, can be used with the Apply Dictionary Based Sentiment Operator. Its input is a Collection of tokenized Documents. This gives you the freedom to use all text mining Operators to prepare your Documents. A typical workflow would be to create a Collection of Documents (e.g. via Read Documents and Loop Files) in conjunction with a Loop Collection. Inside the Loop Collection, you can use any of the Operators of the Text Processing Extension.

The result of the Apply Dictionary Based Sentiment Operator is an ExampleSet with:

- The text

- The Score – i.e. the sum of weights for this document

- The Positivity – i.e. the sum of positive weights for this document

- The Negativity – i.e. the sum of negative weights for this document

- The Uncovered Tokens – i.e. the tokens which were in the document but not in the model
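The output columns listed above can be illustrated with a small Python sketch. It is not the Operator's code: the function name is hypothetical, the dictionary is the sample table from this post, and lower-casing the tokens and reporting negativity as a negative number are assumptions.

```python
# Sample dictionary taken from the table in this post, keyed in lower case.
DICTIONARY = {"abnormal": -1.0, "aborted": -0.4, "absurd": -1.0,
              "agile": 1.0, "affordable": 1.0}


def score_document(tokens, dictionary):
    """Sum the dictionary weights of a tokenized document."""
    positivity = 0.0
    negativity = 0.0
    uncovered = []
    for token in tokens:
        # Assumption: lookups are case-insensitive.
        weight = dictionary.get(token.lower())
        if weight is None:
            uncovered.append(token)  # token is not in the model
        elif weight > 0:
            positivity += weight
        else:
            negativity += weight
    return {
        "score": positivity + negativity,
        "positivity": positivity,
        "negativity": negativity,
        "uncovered": uncovered,
    }


result = score_document(["Agile", "but", "absurd", "and", "aborted"], DICTIONARY)
```

Here "Agile" contributes to the positivity, "absurd" and "aborted" to the negativity, and "but" and "and" end up as uncovered tokens.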

The Process shown in the images is attached to this post. Feel free to check it out.

Performance (AUPRC)

The Performance (AUPRC) Operator enables you to evaluate a binominal classification problem with a new performance measure.

AUPRC stands for Area Under the Precision-Recall Curve and is closely related to AUC. AUC measures the area under the curve that plots True Positive Rate against False Positive Rate (the ROC curve). AUPRC is very similar, but replaces False Positive Rate with precision.

This is beneficial because precision can be a more interpretable measure than FPR. On the other hand, precision depends strongly on the class balance, so AUPRC is most useful when you know the class balance in your application.
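As a rough illustration of the measure, the sketch below estimates the area under the precision-recall curve with the average-precision (step-wise) sum. The post does not say which interpolation the Operator uses, so this estimator is an assumption, as are the function name and the toy data. Tied scores are not handled specially.

```python
def auprc(labels, scores):
    """Average-precision estimate of the area under the precision-recall curve.

    labels: 1 for the positive class, 0 for the negative class.
    scores: confidence for the positive class, higher means more positive.
    """
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("at least one positive example is required")
    # Rank examples from the most to the least confident prediction.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    true_pos = 0
    area = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            true_pos += 1
            # Recall rises by 1/total_pos here; weight it by the precision at this rank.
            area += (true_pos / rank) / total_pos
    return area


# Made-up toy predictions.
labels = [1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.2]
area = auprc(labels, scores)
```

A ranking that places every positive example ahead of every negative one yields a value of 1.0 under this estimator.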

Papers to Read:

- Ozenne et al., The precision–recall curve overcame the optimism of the receiver operating characteristic curve in rare diseases http://www.sciencedirect.com/science/article/pii/S0895435615001067

- Kamath et al., Effective Automated Feature Construction and Selection for Classification of Biological Sequences http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0099982

Thanks to @SvenVanPoucke for his helpful contribution

Additional Changes

- Improved documentation of the Tukey Test Operator

- Added several tags for the Operators in the Extension

- The Create ExampleSet Operator now correctly uses the separator specified in the parameters of the Operator

- The Get Local Interpretation Operator now has an additional output port which contains the collection of all local models

- The Get Local Interpretation Operator now correctly normalizes the input data. It can also now use a locality heuristic instead of requiring the locality to be specified directly.