Operator Toolbox Version 0.9.0 Released

MartinLiebig

Administrator, Moderator, Employee, RapidMiner Certified Analyst, RapidMiner Certified Expert, University Professor Posts: 3,507

We are proud to release version 0.9.0 of Operator Toolbox. The Data Science teams at RapidMiner have again worked hard to provide you with another set of useful operators. This time we have several features that were triggered by community requests and one operator I always wanted to have.

New Operator: Random Forest Encoder

Random Forests are one of the most used techniques in machine learning. They are fast in computation, can be computed in parallel, can handle numerical and nominal values, and are strong in predicting.

The technique used in Random Forests is an ensemble method called bagging. Ensemble methods train many weak learners and combine them into one strong learner; in bagging, each weak learner is generated by training one 'base learner' on a bootstrapped sample of the data. The results of these base learners are then combined with a (weighted) average to get a prediction.

Example of a Random Forest. The color represents the purity of the node. Each tree results in one score. The score of the forest is the average of the individual trees.

Random Forests use a very special learner as a base learner: Random Trees. Random Trees are similar to decision trees, but consider only a random subset of all columns at each node.
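To make the bagging idea concrete, here is a minimal pure-Python sketch. It is not how RapidMiner implements Random Forests: the helper names (`fit_stump`, `fit_forest`, `forest_score`) are made up for illustration, and each "tree" is simplified to a single split on one randomly chosen column, with the threshold placed at the column mean.

```python
import random

def fit_stump(rows, labels, feature):
    """Fit a one-split 'tree': split one column at its mean and store
    the share of positive labels on each side of the split."""
    threshold = sum(r[feature] for r in rows) / len(rows)
    left = [y for r, y in zip(rows, labels) if r[feature] <= threshold]
    right = [y for r, y in zip(rows, labels) if r[feature] > threshold]
    overall = sum(labels) / len(labels)  # fallback if one side is empty
    p_left = sum(left) / len(left) if left else overall
    p_right = sum(right) / len(right) if right else overall
    return feature, threshold, p_left, p_right

def stump_score(stump, row):
    """Score of one tree: the positive share of the side the row falls on."""
    feature, threshold, p_left, p_right = stump
    return p_left if row[feature] <= threshold else p_right

def fit_forest(rows, labels, n_trees=25, seed=0):
    """Bagging: every tree sees its own bootstrapped sample of the data."""
    rng = random.Random(seed)
    n, n_features = len(rows), len(rows[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        feature = rng.randrange(n_features)         # simplified random column choice
        forest.append(fit_stump([rows[i] for i in idx],
                                [labels[i] for i in idx], feature))
    return forest

def forest_score(forest, row):
    """Score of the forest: the plain average of the individual tree scores."""
    return sum(stump_score(s, row) for s in forest) / len(forest)

# Toy data (invented): the first column separates the classes, the second is noise.
rows = [[0.0, 5.0], [1.0, 3.0], [2.0, 4.0], [8.0, 4.0], [9.0, 5.0], [10.0, 3.0]]
labels = [0, 0, 0, 1, 1, 1]
forest = fit_forest(rows, labels)
low = forest_score(forest, [0.5, 4.0])
high = forest_score(forest, [9.5, 4.0])
```

On this toy data the row with the large first value should receive the higher forest score, because every tree that splits on the first column pushes the two rows apart.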

How to better combine trees?

One question that comes up here is: why do we just average over all the trees? Can’t we do something more “intelligent”? The answer is, of course, yes, a bit.

One way to do this is to create a new table. The new table keeps the label column for all rows (used as the prediction target) and adds a confidence/probability column for each tree. This enables us to train another learner on top, taking the confidences as input and predicting our label. You can think of this as another ensemble method: stacking.

The danger of this idea is that you add additional complexity to your model which might lead to overfitting. I strongly recommend validating these trees with care.

Random Forests as an Encoding Method

Another way to look at this method is to treat the confidences of the individual random trees as new columns, similar to what a PCA or t-SNE does. We feed in data and get out a new representation of the data which is better correlated with the target variable. This is what I call an 'encoder'. The beauty of this encoder is that you can feed in nominal data and get out numerical data. This enables us to feed the result of this encoding into learners like neural nets and SVMs.

In the case of encoding, I would recommend carefully controlling the depth of the trees so that not too many paths are encoded in one attribute. On the other hand, the depth controls the “level of interaction” between your columns.

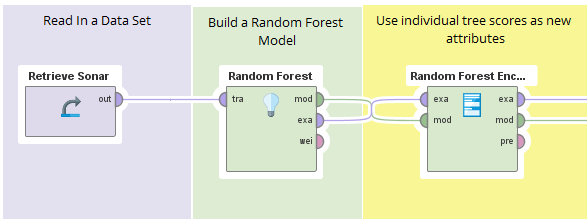

Implementation in RapidMiner

Implementation using RapidMiner. The table delivered at the uppermost output of Random Forest Encoder includes all the scores of the individual trees.

The operator takes an ExampleSet and a Random Forest model and does the transformation. It is of course also possible to quickly implement this in Python or R by iterating over the subtrees in the ensemble.
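As a rough illustration of the "iterate over the subtrees" idea, here is a small pure-Python sketch. The tree representation (nested dicts with a `test` function and `yes`/`no` branches) and the names `tree_confidence` and `encode` are hypothetical and only serve the example; they are not the RapidMiner model format.

```python
def tree_confidence(node, row):
    """Walk one tree down to a leaf and return the confidence stored there."""
    while "leaf" not in node:
        node = node["yes"] if node["test"](row) else node["no"]
    return node["leaf"]

def encode(forest, rows):
    """Return one numerical column per tree: that tree's confidence for each row."""
    return [[tree_confidence(tree, row) for tree in forest] for row in rows]

# A hand-written toy forest on nominal attributes (values are invented).
forest = [
    {"test": lambda r: r["outlook"] == "sunny",
     "yes": {"leaf": 0.2},
     "no": {"leaf": 0.8}},
    {"test": lambda r: r["windy"] == "true",
     "yes": {"leaf": 0.3},
     "no": {"test": lambda r: r["outlook"] == "rain",
            "yes": {"leaf": 0.6},
            "no": {"leaf": 0.9}}},
]

rows = [
    {"outlook": "sunny", "windy": "false"},
    {"outlook": "rain", "windy": "true"},
]

encoded = encode(forest, rows)
# encoded == [[0.2, 0.9], [0.8, 0.3]]
```

Nominal values go in and one numerical column per tree comes out. Averaging each encoded row gives back the usual forest score, while feeding the encoded table plus the label into another learner corresponds to the stacking idea. With scikit-learn, the same loop would run over the `estimators_` of a fitted `RandomForestClassifier` and call `predict_proba` on each tree.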

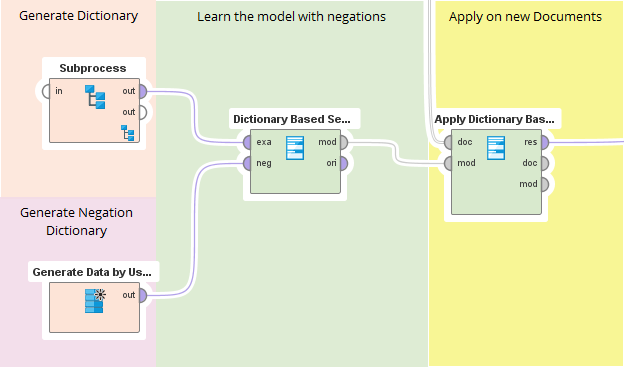

Updated Operator: Dictionary-Based Sentiment Learner

The Dictionary-Based Sentiment Learner operator was one of the operators added to Operator Toolbox in 2017. This operator takes a dictionary with words and their scores, usually between -1.0 (negative sentiment) and 1.0 (positive sentiment), sums up the positivity and negativity for the documents, and returns a score which can be used for sentiment analysis. The operator can be applied to any tokenized document.

However, one common issue in sentiment analysis is the handling of negations. For example, if you have a sentence like:

this article is not bad

you do not want bad to count as a negative word; instead, you want to invert the weight of bad for the sentiment.

This can now be done with the updated Dictionary-Based Sentiment Learner operator by adding a 'negation dictionary'. This dictionary is simply an ExampleSet with a list of negation words. If one of these words occurs in a window of size x before a sentiment word, the weight of that sentiment word is inverted. The window size can be adjusted in the operator's parameters.
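The negation window can be sketched in a few lines of Python. This is only an illustration of the idea, not the operator's actual code: the function name `sentiment_score`, the example dictionaries, and the choice to flip the weight only once even if several negation words fall into the window are all assumptions.

```python
def sentiment_score(tokens, sentiment, negations, window=2):
    """Sum the dictionary weights of all sentiment words in a tokenized document.
    A weight is inverted if a negation word occurs within `window` tokens before it."""
    score = 0.0
    for i, token in enumerate(tokens):
        weight = sentiment.get(token)
        if weight is None:
            continue  # not a sentiment word
        preceding = tokens[max(0, i - window):i]
        if any(t in negations for t in preceding):
            weight = -weight  # assumption: flip once, however many negations are found
        score += weight
    return score

# Hypothetical dictionaries for illustration only.
sentiment = {"bad": -0.7, "good": 0.8}
negations = {"not", "never", "no"}

tokens = "this article is not bad".split()
with_negation = sentiment_score(tokens, sentiment, negations, window=2)  # 0.7
without_window = sentiment_score(tokens, sentiment, negations, window=0)  # -0.7
```

With a window of 2 the word "not" is seen right before "bad", so the weight is inverted; with a window of 0 no preceding token is checked and "bad" keeps its negative weight.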

**NOTE**: This feature required a bit of rework on the original model object. Models created with previous versions of Operator Toolbox are no longer usable; you will need to recreate them.

New Operator: Read Excel Sheet Names

The usual Read Excel operator is used to read one single sheet of an Excel file. If you know the structure of the Excel file, you can also loop over this operator and read various sheets of this Excel file.

The Read Excel Sheet Names operator helps you to read Excel files with different sheet names. It is able to provide you with a list of 'sheetid' and 'sheetnames' for any given Excel file. This can then be used in a Loop to process each individual sheet.

Updated Operator: Group Into Collection

The Group Into Collection operator is one of the most used ones in the Operator Toolbox Extension. We enhanced its capabilities by adding an option to decide how the resulting Collection is ordered.

Sorting can of course have an impact on the execution time of the operator. So if you don't need a specific order for the collection, you can stay with the default sorting order, which is 'none'.

The different sort options are:

- none: No specific order.

- alphabetical: The collection is ordered alphabetically. The values of the group by attribute are compared character by character and ordered accordingly. Note that the values [4,11,2,1,47] will therefore be ordered: [1,11,2,4,47]

- numerical: The collection is ordered numerically. This is only possible if the group by attribute is numerical. The values are ordered by their double value. The above-mentioned example would be correctly ordered: [1,2,4,11,47]. The values can also be doubles, which are ordered correctly as well.

- occurrences: The collection is ordered by the number of occurrences of the values of the group by attribute in the original ExampleSet.
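The difference between the sort options is easy to reproduce in plain Python. This is just an illustration with invented values, not the operator's code; in particular, ordering the most frequent value first for 'occurrences' is an assumption, since the direction is not stated above.

```python
from collections import Counter

# Values of a hypothetical group by attribute, one entry per example.
values = ["4", "11", "2", "1", "47", "11", "2", "11"]

# Distinct group values in the order they first appear ('none').
groups = list(dict.fromkeys(values))

# alphabetical: character-by-character comparison of the values as text.
alphabetical = sorted(groups)           # ['1', '11', '2', '4', '47']

# numerical: comparison by the double value.
numerical = sorted(groups, key=float)   # ['1', '2', '4', '11', '47']

# occurrences: comparison by how often each value occurs (most frequent first here).
counts = Counter(values)
occurrences = sorted(groups, key=lambda v: counts[v], reverse=True)
# ['11', '2', '4', '1', '47']
```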

Updated Operator: Create ExampleSet

The Create ExampleSet operator now has an additional option to trim the attribute names. This means you can put whitespace around the separator for better readability and let the operator trim it away.

The operator now also correctly parses the metadata of the ExampleSet to be created, so that you can use the metadata in subsequent operators.

Dortmund, Germany

Comments

Very cool! Thanks!