"How to manage resources on a Hadoop cluster when we are working on it through RapidMiner Radoop"

Pavithra_Rao

Administrator, Moderator, Employee, RapidMiner Certified Analyst, RapidMiner Certified Expert, Member Posts: 123

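Radoop submits jobs to the Hadoop cluster according to the advanced properties of the Radoop connection. The settings below control which resource queue each type of job uses and how much of the cluster it can claim:

1. The queue to which a MapReduce job is submitted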

Advanced Hadoop Parameters:

key is "mapreduce.job.queuename"

value: default

This must match one of the queues defined in mapred-queues.xml for the system. Before specifying a queue, ensure that the system is configured with the queue and that access is allowed for submitting jobs to it.

2. The YARN resource queue to which the client submits a Spark application
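The check described above can be done before the queue name is entered in the connection. The following is a minimal sketch, assuming the queue definitions follow the layout of Hadoop's mapred-queues.xml template (`<queues>` containing `<queue>` elements with a `<name>` child). The sample XML, the `analytics` queue, and the helper names are hypothetical. On YARN clusters the queues are often defined in the scheduler configuration instead, and this sketch does not verify submit permissions.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample following the layout of Hadoop's mapred-queues.xml template.
SAMPLE_QUEUES_XML = """
<queues>
  <queue>
    <name>default</name>
    <state>running</state>
  </queue>
  <queue>
    <name>analytics</name>
    <state>running</state>
  </queue>
</queues>
"""


def defined_queues(xml_text):
    """Return the set of queue names declared in a mapred-queues.xml document."""
    root = ET.fromstring(xml_text.strip())
    names = (q.findtext("name", default="") for q in root.iter("queue"))
    return {n.strip() for n in names if n.strip()}


def is_queue_defined(queue, xml_text):
    """True if `queue` is one of the declared queue names."""
    return queue in defined_queues(xml_text)


if __name__ == "__main__":
    # Check candidate values for mapreduce.job.queuename against the sample.
    for candidate in ("default", "reporting"):
        print(candidate, is_queue_defined(candidate, SAMPLE_QUEUES_XML))
```

In practice the XML text would be read from the cluster's configuration directory rather than from an inline string.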

Advanced Spark Parameters:

key is "spark.yarn.queue"

value: default

3. HiveServer2

Advanced Hive Parameters:

Hive on Tez

key is hive.server2.tez.default.queues

Hive on MapReduce or Spark

key is mapreduce.job.queuename

value: default

Number of Hive sessions per queue (Hive on Tez)

key is hive.server2.tez.sessions.per.default.queue

value: default
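The queue settings from items 1 to 3 can be collected in one place. The sketch below only assembles the key/value pairs named above, grouped by the Radoop connection section they belong to. The function name, the `hive_engine` argument, and the `analytics` queue in the example are hypothetical, and the pairs still have to be entered in the connection dialog by hand.

```python
def radoop_queue_parameters(queue="default", hive_engine="tez"):
    """Group the queue-related keys by Radoop advanced parameter section.

    hive_engine is a hypothetical switch: "tez", "mr" or "spark".
    """
    if hive_engine == "tez":
        hive_params = {"hive.server2.tez.default.queues": queue}
    elif hive_engine in ("mr", "spark"):
        hive_params = {"mapreduce.job.queuename": queue}
    else:
        raise ValueError(f"unknown Hive execution engine: {hive_engine!r}")

    return {
        "Advanced Hadoop Parameters": {"mapreduce.job.queuename": queue},
        "Advanced Spark Parameters": {"spark.yarn.queue": queue},
        "Advanced Hive Parameters": hive_params,
    }


if __name__ == "__main__":
    # "analytics" is a placeholder queue name for illustration.
    for section, params in radoop_queue_parameters("analytics").items():
        print(section)
        for key, value in params.items():
            print(f"  key: {key}  value: {value}")
```

Keeping the queue name in a single argument makes it harder for the Hadoop, Spark, and Hive settings to drift apart when the target queue changes.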

4. For Spark:

The default setting for “Spark Resource Allocation Policy” in the Radoop connection is “Static, Heuristic Configuration”.

Recommended: “Dynamic Resource Allocation”. With this policy, a job starts with a few small containers and scales up only if required.
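For orientation, dynamic allocation in Spark itself is governed by a handful of standard properties. The sketch below assembles them as key/value pairs. Whether Radoop sets exactly these when the policy is selected is an assumption, the executor counts are placeholders, and the helper name is hypothetical. On YARN, dynamic allocation typically also requires the external shuffle service to be enabled on the cluster.

```python
def dynamic_allocation_conf(min_executors=0, max_executors=10, initial_executors=None):
    """Build standard Spark properties for dynamic allocation (placeholder sizes)."""
    if min_executors < 0 or max_executors < min_executors:
        raise ValueError("need 0 <= min_executors <= max_executors")
    # Start small by default: begin at the lower bound and let Spark scale up.
    initial = min_executors if initial_executors is None else initial_executors
    if not min_executors <= initial <= max_executors:
        raise ValueError("initial_executors must lie between min and max")

    return {
        "spark.dynamicAllocation.enabled": "true",
        "spark.shuffle.service.enabled": "true",
        "spark.dynamicAllocation.minExecutors": str(min_executors),
        "spark.dynamicAllocation.initialExecutors": str(initial),
        "spark.dynamicAllocation.maxExecutors": str(max_executors),
    }


if __name__ == "__main__":
    for key, value in dynamic_allocation_conf(min_executors=1, max_executors=8).items():
        print(f"key: {key}  value: {value}")
```

Capping `spark.dynamicAllocation.maxExecutors` keeps a single job from taking over the whole queue once it scales up.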

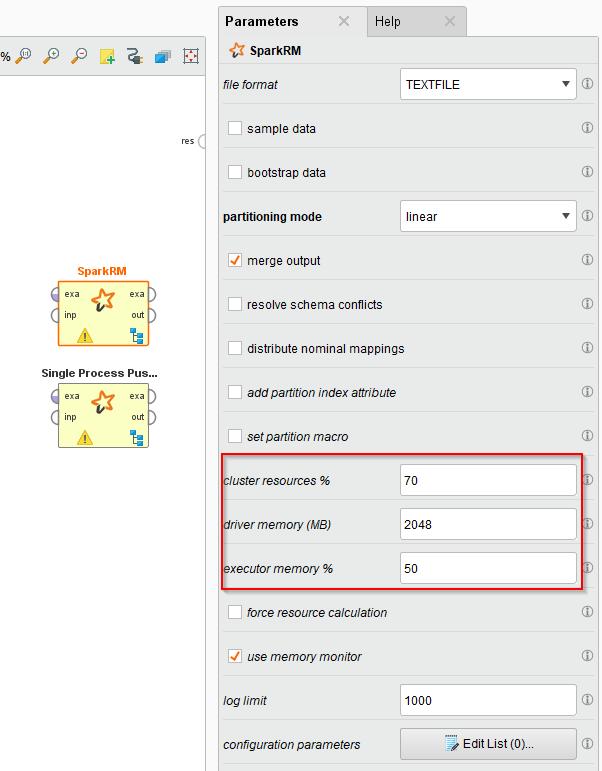

Radoop Advanced connection Properties

5. SparkRM:

SparkRM operators have their own settings, exposed as operator parameters, which can be changed to suit the requirements of the subprocess. In particular, “cluster resources %” and “executor memory %” can be tuned.

SparkRM Parameters

Cheers,