Text mining

Tuba

New Altair Community Member

Hello. I am pursuing a master's degree in business information management at Mersin University. I will share my problem in detail on the link you sent. I use RapidMiner in my thesis, but I have run into two problems. I have 4500 Turkish theses and 1500 articles. Each thesis is 150 pages (150 * 4500) and each article is 20 pages (1500 * 20). I want to classify them with RapidMiner, but because the number of theses is so high, RapidMiner cannot complete the classification and constantly gives errors. How can I solve this problem? My PC has an i5 processor and 5 GB of RAM.

My second problem is that I want to use the most frequently used words in my thesis, but when I apply Stem (Snowball) in Turkish, different words come out and the words are not separated from their suffixes. So I can't use the stemmer, and I get a lot of words with the same meaning. In short, I cannot make progress on my thesis. Can you help me?

Answers

-

For the first problem

0 -

-

Let me briefly explain the subject of my thesis. I have articles and theses. I divided these theses and articles into three main themes, and I divided each main theme into sub-themes. My goal here is to find out to what extent I made these separations correctly. For this, I ran a classification with RapidMiner.

0 -

Hey @Tuba,

Thank you for sharing. I have two ideas for solving the first problem:

1. You could expand your RAM using disk space, see here: https://www.youtube.com/watch?v=z_c60Osd__c
2. You divide the input data into smaller parts or make a few sub-processes for preprocessing the data.

For solving the second problem it would be nice to see some of your data / results. Have you tried it without stemming? Maybe you will find some examples of Turkish RapidMiner projects on the web?

0 -

Thank you. I will try your suggestions for my first question. Sorry, I couldn't find a Turkish stemmer. When I run it with a small amount of data, I can see the table. Each word is listed separately for each of its suffixes; the forms are not recognized as the same word.

0 -

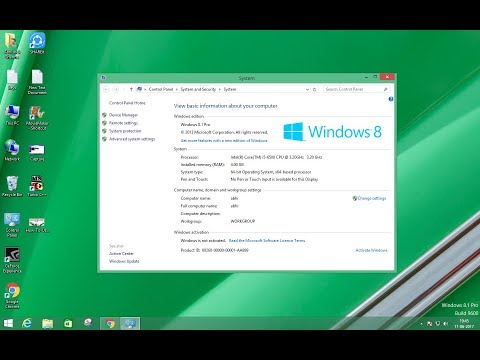

Hi @Tuba, in your image I can see you have over 7k attributes created. I guess that is what makes your computer go nuts. Are you using any configuration for the pruning method?

One tip for sharing your configuration and process images: try File > Print/Export Image, then choose Design and export the image.

You can also use Loop Batches to reduce the amount of memory used while trying to process all your files.

0 -

Thank you. I only know RapidMiner as far as I have learned from watching videos, so I do not know how to configure it. 😔 I will try what you suggest.

-

Just split your input files up into batches and process each batch separately. Then make sure you use one of the pruning options for generating the wordlist in the Process Documents from Data operator. This will keep everything within your available memory and solve your first problem. You can then combine all the resulting wordlists at the end.
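For anyone who prefers to see the idea outside RapidMiner, here is a minimal Python sketch of the same batch-then-prune approach. It is only an illustration under assumptions: the function names, the toy documents, and the thresholds (`min_docs`, `max_fraction`) are made up for this example and are not RapidMiner parameters. It also differs slightly from the description above in that it merges the per-batch counts first and prunes once at the end, so a word that is rare in every batch but common overall is not lost.

```python
from collections import Counter
from itertools import islice
import re

TOKEN_RE = re.compile(r"\w+")

def tokenize(text):
    # Note: str.lower() does not apply Turkish casing rules for I/İ,
    # so real Turkish text may need locale-aware lowercasing.
    return TOKEN_RE.findall(text.lower())

def batches(iterable, size):
    """Yield lists of at most `size` items so only one batch is held at a time."""
    it = iter(iterable)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk

def batch_document_frequency(docs):
    """For one batch, count how many documents contain each word."""
    df = Counter()
    for text in docs:
        df.update(set(tokenize(text)))
    return df

def build_pruned_wordlist(docs, batch_size, min_docs, max_fraction):
    """Merge per-batch document frequencies, then prune rare and very common words."""
    total_df = Counter()
    n_docs = 0
    for chunk in batches(docs, batch_size):
        total_df.update(batch_document_frequency(chunk))
        n_docs += len(chunk)
    max_docs = max_fraction * n_docs
    return {w: c for w, c in total_df.items() if min_docs <= c <= max_docs}

# Toy documents standing in for the real theses and articles.
docs = [
    "veri madenciliği ve metin",
    "metin madenciliği",
    "veri analizi ve metin",
    "metin sınıflandırma",
]
wordlist = build_pruned_wordlist(docs, batch_size=2, min_docs=2, max_fraction=0.75)
print(wordlist)
```

In practice `docs` would be a generator that reads one file at a time from disk, so that memory use depends on the vocabulary size rather than on the number of documents.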

I don't know enough about Turkish and stemming to have suggestions for the second issue, but perhaps google some questions related to stemming in Turkish and you may find some helpful resources.

0 -

Thank you. I divided my data into 3 main themes and divided those into 8 sub-themes. The articles can be classified because there are few of them, but for the theses it cannot classify even the 8 sub-themes. I still can't find a solution.

0 -

@Tuba

I am not very good at text mining with RM; I feel more comfortable in Python. Stem (Snowball) actually works properly for English words, but it may not be the best choice for Turkish. If your documents are in Turkish, you may need to use Stem (Dictionary), which requires a file of patterns for Turkish. There are good dictionaries for Turkish words that can be used in R/Python. You can search for one.

In your post on 25 March, you are showing the result from the exa port of your Process Documents operator. If you connect the "wor" port to the res port, you can see the TF-IDF counts.
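To give a rough idea of what dictionary-based stemming does, here is a small Python sketch. The rules are hypothetical and written by hand for two words only; they are not taken from a real Turkish dictionary, and the layout is not necessarily the file format RapidMiner expects. Each stem is paired with regular expressions that match its inflected forms, and a token is replaced by the stem only when one of the patterns matches the whole token.

```python
import re

# Hypothetical hand-written rules: stem -> patterns for its inflected forms.
STEM_RULES = {
    "kitap": [r"kitap\w*", r"kitab\w*"],
    "tez": [r"tez(ler\w*|i|in|e|de|den)?"],
}

# Compile one combined pattern per stem.
COMPILED = [
    (stem, re.compile("|".join(f"(?:{p})" for p in patterns)))
    for stem, patterns in STEM_RULES.items()
]

def dictionary_stem(token):
    """Return the stem whose pattern matches the whole token, else the token unchanged."""
    for stem, pattern in COMPILED:
        if pattern.fullmatch(token):
            return stem
    return token

tokens = ["kitaplar", "kitabı", "kitaptan", "tezler", "tezin", "tezgah", "veri"]
stems = [dictionary_stem(t) for t in tokens]
print(stems)
# ['kitap', 'kitap', 'kitap', 'tez', 'tez', 'tezgah', 'veri']
```

Listing the suffixes for "tez" explicitly keeps an unrelated word such as "tezgah" from being merged into it, which is the kind of mistake a loose pattern can cause.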

Also, this will give you an idea about your document so you can further transform your dataset.

I would recommend the Filter Tokens (by Length) operator, so you can cut many words at once after you examine the word table.
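As a rough picture of what filtering by length does, here is a tiny Python sketch. The function name and the default limits of 3 and 25 characters are assumptions chosen for the example, not recommended settings.

```python
def filter_tokens_by_length(tokens, min_chars=3, max_chars=25):
    """Keep only tokens whose length is within [min_chars, max_chars]."""
    return [t for t in tokens if min_chars <= len(t) <= max_chars]

tokens = ["ve", "bu", "veri", "madenciliği", "a", "x" * 30]
kept = filter_tokens_by_length(tokens)
print(kept)
# ['veri', 'madenciliği']
```

Very short tokens are often function words or extraction noise, and very long ones are often words glued together during PDF extraction, so a length filter removes both groups in one step.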

I just made an example set of 5 academic papers about Neural Network in Flood Forecasting.

Is it something that can help you? You can further filter and select data for modeling.

Second, regarding classifying the documents, I missed how you are planning to do it. Are you using metadata-like information to classify them? Or just processing the documents and feeding a k-NN model? If you can tell me more, I may be able to help you.

Best,

Deniz

0 -

First of all, thank you very much. I could explain better if we could communicate in Turkish. I will try the model you suggested.

I have divided the individual articles and theses into three main themes according to their topics, and I divided the main themes into sub-themes. I applied k-NN after that. I don't know how to code, and I don't know Python. I couldn't find a ready-made Turkish stemmer. I'm doing a master's degree at Mersin University, and the stemming and the sheer amount of data have given me a lot of trouble.