Method for identifying a set of Reliable Negative examples from unlabeled data

Hello,

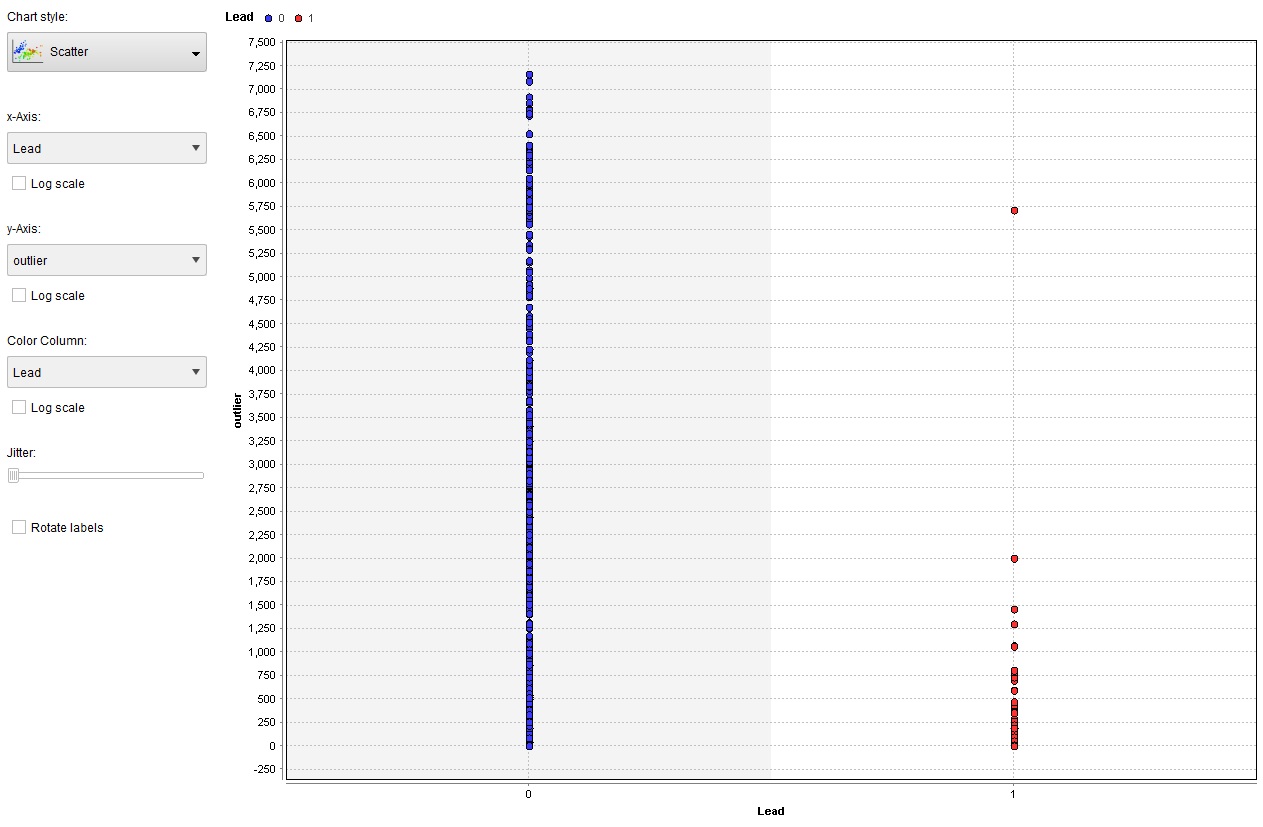

I am looking for confirmation on how I handled identifying a set of reliable negative examples from unlabeled data. I have seen numerous methods performed; however, nothing specific about the approach I took (which isn’t to say it isn’t out there; I just haven’t come across it in my research).

Some background: the dataset that I’m working with has a set of positive examples (n = 964) and a larger set of unlabeled data (n = 8,107). I do not have a set of negative examples. I have attempted to run a one-class SVM but am not having any luck. So, I decided to try to identify a set of reliable negatives from the unlabeled data to be able to run a traditional regression model.

The approach I took was to run a local outlier factor (LOF) on all of my data to see if I could isolate a set of examples whose outlier scores are clearly different from those of my positive examples. The chart below shows the distribution of scores between my positive class (lead = 1) and my unlabeled data (lead = 0). As can be seen, the positive examples (minus the 1 example with an outlier score near 5,750) are all clustered at or below an outlier score of 2,000.

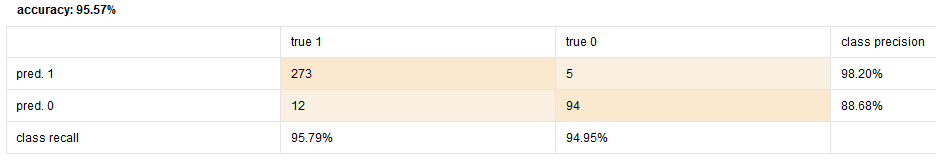

From here, I selected all of the examples from this output where lead = 0 (to ensure I’m only grabbing unlabeled data) and outlier score ≥ 2001 (the highest lead outlier score is 2,000.8) to use as my set of reliable negative examples (n = 315). I then created a dataset of all of my positive examples plus the set of reliable negatives (n = 1,279) and fed this into a logistic regression model. I am getting really great performance out of both the training and testing sets, which is making me question my methods. Here is the training performance table:

- Training AUC: 0.968 +/- 0.019 (mikro: 0.968)

Here is the testing performance table:

- Testing AUC: 0.973

My questions are:

- Does the logic behind how I identified a set of reliable negatives make sense? Are there any obvious (or not so obvious) flaws in my thinking?

- By constructing my final dataset in this way, am I making it too easy for the model to correctly classify examples?

Thank you for any help and guidance.

Kelly M.


Answers

Dear Kelly,

This is a very interesting post. I agree with most of what you said and, to be honest, I would have followed a very similar line of thought.

There is one thing that bothers me a bit here: did you build yourself a self-fulfilling prophecy? In the end, the logistic regression is tasked with finding the rules generated by LOF, so the LogReg produces an approximation of the very same thing. That does not necessarily mean you have found the underlying pattern behind your "strange" behaviour. What you do get is an interpretable and deployable form of the LOF outlier detection model, which is fairly nice. But whatever you plan to do with it, you always need to keep in mind where it comes from.

Best,

Martin

Dortmund, Germany

Hi @KellyM ,

Without knowing more about the background of your use case, I cannot answer your first question. Let me share my two cents:

a. If you run local outlier factor (LOF) on the unlabeled data and use the examples with high outlier scores as the negative set in your predictive model, do you think they would be a good representative sample of all "negative" data?

In your model, almost all of the positive set has an LOF outlier score < 2001, and all of the negative set used in the model has an outlier score ≥ 2001, so of course the model does a great job (of differentiating high outlier scores from low ones). Selection bias was introduced during data prep at the very beginning, and that helps the model "cheat".
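To make the selection-bias point concrete, here is a minimal Python sketch on synthetic data. Everything in it is an assumption for illustration: the single made-up feature, the distance-from-the-positive-mean score standing in for LOF, and the helper names `outlier_score` and `auc`. It is not the RapidMiner process from the original post.

```python
import random

def auc(pos_scores, neg_scores):
    """Fraction of (positive, negative) pairs in which the negative has the
    higher outlier score; ties count as half a pair."""
    wins = 0.0
    for n in neg_scores:
        for p in pos_scores:
            if n > p:
                wins += 1.0
            elif n == p:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))

random.seed(0)
# Synthetic 1-D feature: positives and most unlabeled rows share one
# distribution; a minority of unlabeled rows is far more spread out.
positives = [random.gauss(0.0, 1.0) for _ in range(200)]
unlabeled = ([random.gauss(0.0, 1.0) for _ in range(1500)]
             + [random.gauss(0.0, 5.0) for _ in range(500)])

center = sum(positives) / len(positives)

def outlier_score(x):
    # Stand-in for LOF (assumption): distance from the mean of the positives.
    return abs(x - center)

pos_scores = [outlier_score(x) for x in positives]
unl_scores = [outlier_score(x) for x in unlabeled]

# "Reliable negatives": unlabeled rows that score above every positive.
threshold = max(pos_scores)
neg_scores = [s for s in unl_scores if s > threshold]

auc_constructed = auc(pos_scores, neg_scores)  # positives vs. selected negatives
auc_all = auc(pos_scores, unl_scores)          # positives vs. all unlabeled rows
print(len(neg_scores), auc_constructed, round(auc_all, 3))
```

On the constructed set the score separates the two groups perfectly because the negatives were chosen that way, while against all unlabeled rows it does not. A model trained on the attributes the score was computed from can learn much of that same separation, so a high AUC on the constructed set says little about how ordinary unlabeled rows will be ranked.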

b. Are you running machine learning to predict qualified leads? Do you know the overall proportion of positive leads in the whole population?

Usually, in marketing lead qualification, positive leads make up no more than 5-15% of the population, which is a really small portion. To leverage supervised learning models with positive and negative examples, you can start by building a quick-and-dirty under-sample (~1,000 examples) of the unlabeled data and using it as negative examples. You may later find unlabeled data that was treated as negative but is predicted as a positive lead by your model. It definitely will happen! It is not the model's fault. With some business domain knowledge, you can improve the data labels and, with them, the model's accuracy and recall.
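The under-sampling idea can be sketched in a few lines of Python. This is only an illustration under assumptions: the function names `build_training_set` and `flag_for_review`, the `id` field, the 0.8 cutoff, and the three model scores are all hypothetical; only the row counts (964 positives, 8,107 unlabeled) come from the thread.

```python
import random

def build_training_set(positives, unlabeled, n_negatives=1000, seed=42):
    """Label a random sample of unlabeled rows as provisional negatives."""
    rng = random.Random(seed)
    k = min(n_negatives, len(unlabeled))
    provisional_negatives = rng.sample(unlabeled, k)
    rows = ([(row, 1) for row in positives]
            + [(row, 0) for row in provisional_negatives])
    rng.shuffle(rows)
    return rows

def flag_for_review(scored_unlabeled, cutoff=0.8):
    """Return unlabeled rows the model scores as likely positives,
    highest score first, so a domain expert can check their labels."""
    flagged = [(row, score) for row, score in scored_unlabeled if score >= cutoff]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)

# Toy rows using the counts from the thread.
positives = [{"id": f"P{i}"} for i in range(964)]
unlabeled = [{"id": f"U{i}"} for i in range(8107)]
training = build_training_set(positives, unlabeled)
print(len(training))  # 1964

# Hypothetical model scores for three unlabeled rows.
scored = [(unlabeled[0], 0.10), (unlabeled[1], 0.93), (unlabeled[2], 0.85)]
print([row["id"] for row, _ in flag_for_review(scored)])  # ['U1', 'U2']
```

Because the sample is random rather than chosen by an outlier score, the provisional negatives are not separated from the positives by construction; the price is that some of them will really be positives, which is what the review step is for.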

Last but not least, we have some interesting links for inspiration. We eat our own dog food.

Let me know if you have any further questions.

I echo a lot of what my colleagues @yyhuang and @mschmitz say. I think the problem is truly understanding what the "negative" examples are and you're trying to find a handy way of autolabeling those.

I think the LOF method is a great start for exploring what the potential negative labels could be, but saying that LOF > 2000 is negative and then training a LogReg on it just games the system, so to speak. The LogReg learns really well from the "hint" that LOF gave it. You were right to question your results, no matter how awesome they were.

I don't know enough about the data you're working with, but what is considered a "negative label"? Was it a fraud event, an intrusion, someone churning from your company, etc.? I would think you need to start with a few examples like this and probably bootstrap your way to a training set.

This is quite an interesting problem, would love to banter more about it and see if we can come to a conclusion.

Hello @mschmitz, @yyhuang and @Thomas_Ott:

Thank you all for your help. Let me provide more background information on the dataset and other steps I took prior to running the LogReg.

I am looking to create a predictive model for identifying counterfeit vehicle identification numbers (VINs), which represents my “lead” variable. A counterfeit VIN is a fake, made-up VIN that was not produced by the vehicle manufacturer. @Thomas_Ott, the data that I am trying to identify is a fraud event. Every month, I get thousands more VIN records that I have to iterate through to identify leads. The positive class represents less than 1% of the overall data. The goal of my project is to create a predictive model such that a smaller, more concentrated list of VINs can be iterated through. I agree with you @Thomas_Ott that the biggest issue revolves around identifying negative examples. I have ways of excluding VINs from consideration as a lead just by looking at certain characteristics; however, I excluded all VINs that wouldn’t be a viable lead from my dataset prior to importing into RapidMiner. So, I am left with a list of VINs that, on the surface, could be a lead.

As previously mentioned, I ran LOF on all of my examples to identify the set of reliable negatives. After that, I went through some data preprocessing before feeding the final dataset into the LogReg model. Below is a screenshot of my workflow and a description of each step.

Step 1/ETL – Retrieves the original dataset, sets label role, and removes irrelevant attributes.

Step 2/Multiply – Multiplies the dataset into two streams.

Step 3/Leads – Selects only the records that make up the positive class from the original dataset (Lead = 1).

Step 4/LOF_ExampleSet – Retrieves the ExampleSet output from the LOF model.

Step 5/Filter Examples – Applies the same filters mentioned in the original post to select only the set of reliable negatives (Lead = 0 and Outlier ≥ 2001).

Step 6/Join – When I ran the LOF, I normalized the data first. This step inner joins the reliable negatives to the original dataset to pull in the original attribute values for each example.

Step 7/Reliable Negatives – This step filters out the Outlier score from the dataset so that it isn’t fed into the LogReg model as an attribute.

Step 8/Union – This unions my positive class and reliable negatives into the final dataset of 1,279 examples, which was then used to drive the LogReg model. I then stored this dataset as a new data object (step not shown) which I used in the LogReg model.
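For readers who do not use RapidMiner, Steps 3 through 8 can be approximated in plain Python roughly as follows. This is a sketch under assumptions, not the actual process: the join key `id`, the attribute `attr_a`, the function name `build_final_dataset`, and the toy values are hypothetical; only the filter (Lead = 0 and Outlier ≥ 2001) comes from the description above.

```python
def build_final_dataset(original_rows, lof_rows, threshold=2001.0):
    """original_rows hold the raw attributes; lof_rows hold the LOF output."""
    # Step 3: positives from the original data.
    positives = [r for r in original_rows if r["lead"] == 1]
    # Step 5: unlabeled rows whose outlier score clears the threshold.
    reliable_ids = {r["id"] for r in lof_rows
                    if r["lead"] == 0 and r["outlier"] >= threshold}
    # Step 6: inner join back to the original (un-normalized) attributes.
    # Step 7: the outlier score is never copied over, because it exists
    # only in lof_rows.
    negatives = [r for r in original_rows if r["id"] in reliable_ids]
    # Step 8: union of positives and reliable negatives.
    return positives + negatives

original = [
    {"id": 1, "lead": 1, "attr_a": 0.42},
    {"id": 2, "lead": 0, "attr_a": 0.38},
    {"id": 3, "lead": 0, "attr_a": 9.10},
    {"id": 4, "lead": 1, "attr_a": 0.51},
]
lof_output = [
    {"id": 1, "lead": 1, "outlier": 310.2},
    {"id": 2, "lead": 0, "outlier": 455.0},
    {"id": 3, "lead": 0, "outlier": 5120.7},
    {"id": 4, "lead": 1, "outlier": 5750.0},  # a high-scoring positive stays positive
]
final = build_final_dataset(original, lof_output)
print([(r["id"], r["lead"]) for r in final])  # [(1, 1), (4, 1), (3, 0)]
```

Note that dropping the outlier column removes the score itself, but membership in the negative class is still decided by that score, which was computed from the same attributes that remain in the dataset.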

I may be wrong in my thinking here but since I’m only using my original data and not including the outlier score as an attribute, I thought that the model would be unbiased by it. I am not including the LOF in the LogReg workflow at all actually. I completed that step in a separate process, completed the data preprocessing in another workflow, and then ran the LogReg in its own workflow.

As an aside, I am noticing that the confidence levels provided for my predictions are mostly 1s and 0s, with about 14% deviating from that. Is this indicative of an over-fit model (or some other possible shortcoming)?
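One possible contributor, offered as an assumption rather than a diagnosis of this particular model: when two classes are perfectly (or almost perfectly) separable, an unregularized logistic regression keeps increasing its weights the longer it is fitted, which pushes predicted confidences toward 0 and 1. The minimal Python sketch below shows this on made-up 1-D data with a hand-written gradient-ascent fit; `fit_weight` is a hypothetical helper, and a real implementation with regularization or early stopping may behave differently.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_weight(xs, ys, steps, lr=0.1):
    """Gradient ascent on the log-likelihood of p = sigmoid(w * x),
    with no intercept and no regularization penalty."""
    w = 0.0
    for _ in range(steps):
        grad = sum((y - sigmoid(w * x)) * x for x, y in zip(xs, ys))
        w += lr * grad
    return w

# Made-up, perfectly separable data: positives on the right, negatives on the left.
xs = [1.0, 2.0, 3.0, -1.0, -2.0, -3.0]
ys = [1, 1, 1, 0, 0, 0]

w_short = fit_weight(xs, ys, steps=100)
w_long = fit_weight(xs, ys, steps=1000)
confidences = [sigmoid(w_long * x) for x in xs]
print(w_short < w_long)  # True: the weight is still growing after 100 steps
print([round(c, 3) for c in confidences])
```

If the reliable negatives were selected so that they sit far from the positives, near-separability of this kind would be expected, and saturated confidences would then reflect how the dataset was built rather than how certain the model can be about new VINs.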

@mschmitz, thank you for your interest! It has been quite fun trying to figure out a solution to the problem that I’m having and I’m glad to have confirmation that this line of thinking makes sense. Given the additional information that I provided, do you still think that my LogReg is finding the rules generated by LOF?

@yyhuang, in regard to your question about these being a good representation of all “negative” data, I do not have any examples of negative data to compare to. Unfortunately, we have not kept track of potential leads that ended up not being leads, so I do not have a way of knowing if this is a good representation of the negative class. As such, I thought using an anomaly detection method would be best for my purposes. I look forward to watching the webinar on identifying the most qualified leads to see if there is another method that I can try. Thank you for the additional resources!

@Thomas_Ott, I would love to discuss this problem with you in more detail. I’m new to the forum; do you have a way of contacting me through a private message of some sort?

Thank you all again for your assistance. This has been truly helpful.

KellyM

Sure, we can take this offline. You can send a private message through the forum here.

I completely get that this is a highly imbalanced problem, but how do you know that a VIN is fake? If you looked at a list of VINs, could you spot which one is fake? How have the police or end users such as yourself reconciled the fakes? Is there a database to check the VINs against?