Evolutionary optimization: type of nested learner

kypexin

Moderator, RapidMiner Certified Analyst, Member Posts: 291

Hi guys,

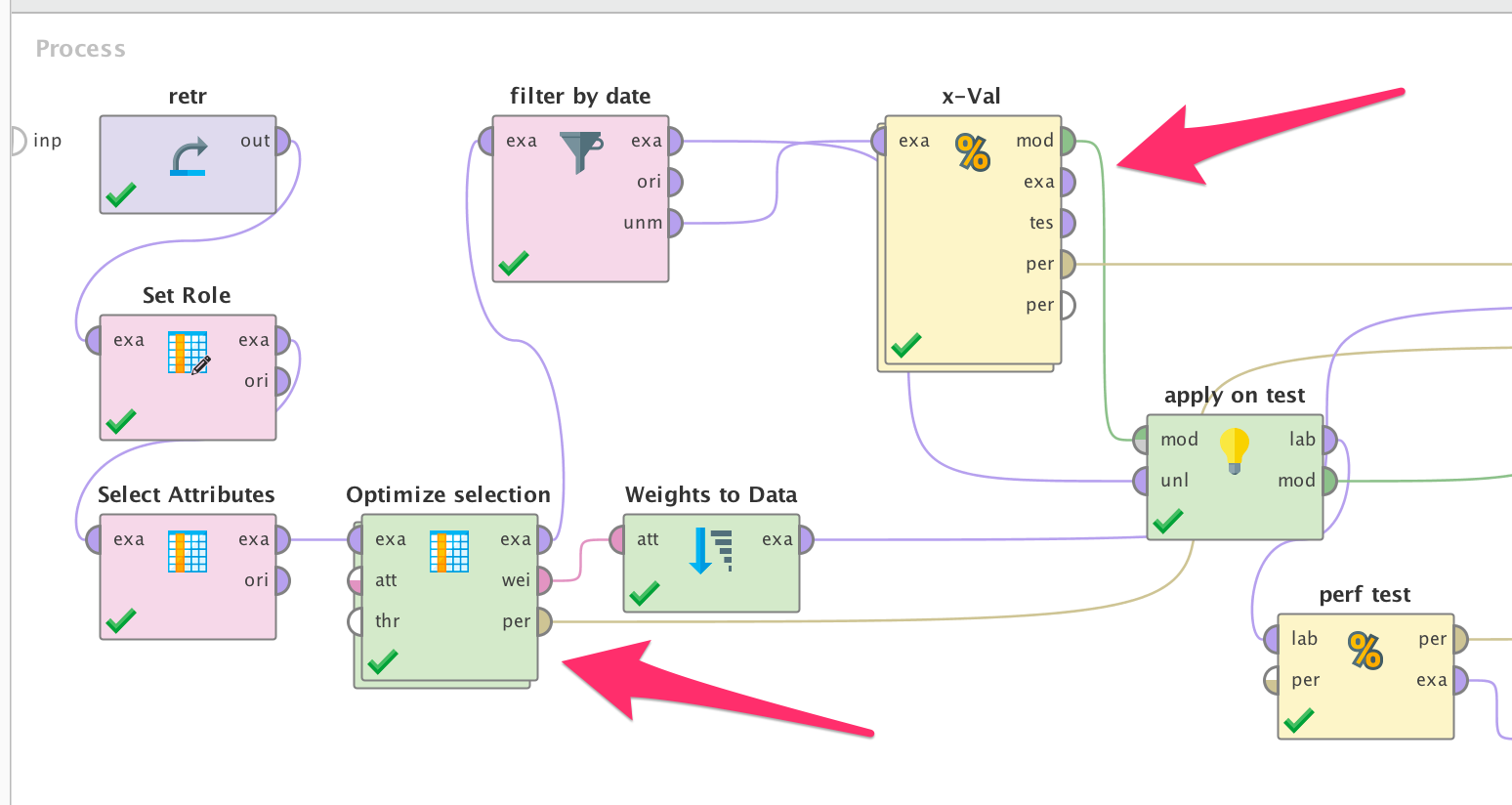

I have a process where I perform evolutionary selection optimization, validate the model in the normal way using X-Validation, and then apply it to test data. The model I am training is a GBT with certain parameters, so it sits inside the X-Val.

In fact, "Optimize Selection (Evolutionary)" is a nested operator too: it contains another validation process with another learner inside. Initially I used the same GBT learner inside the optimization.

My question is: do I necessarily have to use identical learners with the same parameters in both nested operators marked with arrows? I have experimented a bit and got slightly different results, though the difference is pretty subtle. For example, if I use a random forest inside the optimization, it selects a slightly different set of features, which affects final performance, but I wouldn't say it decreases dramatically.

So I would like some advice, more along the lines of a 'best practice': is it essential to have identical learners in this case, or does it not matter much in the end? In other words, does the type of learner used inside the evolutionary optimization matter, and how much?

Answers

Hey @kypexin,

Well, there is no reason that prevents you from trying this. Usually you would need to validate the optimization as well (!). For the rest: as long as your performance is fine, you are good to go.

~Martin

Dortmund, Germany

Dear all,

Interesting topic!

Unfortunately, I am not bringing an answer but asking a complementary question:

As a best practice, does the feature selection (i.e. the Optimize Selection operator) have to be implemented inside the training part of the Cross Validation operator, or does it not matter?

Thank you,

Regards,

Lionel

Hey @mschmitz

You mean the learner inside the optimization? If so, it is being validated in my case, as the nested chain is actually Optimization -> Validation -> GBT. Otherwise, clear. Thanks!

Vladimir

http://whatthefraud.wtf

Hi @kypexin,

The whole process of feature selection would need to be validated. For example, it can be part of your X-Val on the right-hand side.

~Martin

Edit: @lionelderkrikor, yep. That's one way to do it.

Dortmund, Germany

Hi @mschmitz,

OK, thank you for your answer.

Regards,

Lionel

I agree that the learners do not necessarily need to be the same in the different steps. For example, it is not uncommon in my view to use one learner for feature selection, another for feature engineering, and yet another for final model development. As long as each is appropriately cross-validated, there should be no major problems with doing this.
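One way to picture why the learners can differ: in a wrapper-style evolutionary selection, the inner learner only acts as a fitness function that scores candidate feature subsets. Below is a rough sketch in plain Python, not RapidMiner's actual implementation. The function `evolve_selection` and the two scoring functions are hypothetical stand-ins; a real fitness would train and cross-validate a model on the masked data.

```python
import random

def evolve_selection(fitness, n_features, pop_size=20, generations=30,
                     mutation_rate=0.05, seed=0):
    """Toy evolutionary search over feature masks (tuples of 0/1).

    `fitness` is any callable mask -> float. In a wrapper setup it would be
    the cross-validated performance of the inner learner (assumption: here
    it is just a cheap stand-in function).
    """
    rng = random.Random(seed)
    # Start from the full feature set plus random masks.
    population = [tuple([1] * n_features)]
    while len(population) < pop_size:
        population.append(tuple(rng.randint(0, 1) for _ in range(n_features)))

    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        survivors = ranked[: pop_size // 2]  # elitism: best masks survive unchanged
        children = []
        while len(survivors) + len(children) < pop_size:
            a, b = rng.sample(survivors, 2)
            cut = rng.randrange(1, n_features)  # one-point crossover
            child = a[:cut] + b[cut:]
            # Flip each bit with probability `mutation_rate`.
            child = tuple(bit ^ (rng.random() < mutation_rate) for bit in child)
            children.append(child)
        population = survivors + children
    return max(population, key=fitness)

# Two hypothetical "inner learners" that reward different feature subsets
# and slightly penalize the number of features kept.
def score_learner_a(mask):
    return sum(mask[i] for i in (0, 1, 2, 3)) - 0.1 * sum(mask)

def score_learner_b(mask):
    return sum(mask[i] for i in (0, 1, 2, 5)) - 0.1 * sum(mask)

best_a = evolve_selection(score_learner_a, n_features=10)
best_b = evolve_selection(score_learner_b, n_features=10)
print("selected with learner A:", best_a)
print("selected with learner B:", best_b)
```

Swapping the fitness function changes which subset the search favors, which matches the observation that a different inner learner selects a slightly different feature set. Whether that subset also suits the final model is exactly what the outer validation has to tell you.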

Lindon Ventures

Data Science Consulting from Certified RapidMiner Experts

As Martin said, the correct way is to use feature selection as part of the training subprocess. To clarify, here is what happens in the process shown in the picture:

1. Feature selection is done using the whole dataset. We therefore select features that we already know perform well when a model is applied to that dataset.

2. We do cross-validation to test a model with these features. The model scores better than it would on new data (coming from the same process), because the features were chosen with knowledge of the very examples now used for testing. This is especially true if the model for feature selection and the final model are the same. If we think about it, we are actually repeating a cross-validation already done inside the Optimize Selection operator (folds could be different, though).

So in the end we get a more biased, overly optimistic estimate of the model performance.
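The effect is easy to reproduce on pure noise. The sketch below is plain Python, with a simple filter (gap between class means) and a nearest-centroid classifier standing in for Optimize Selection and GBT. All names and sizes are my own assumptions for illustration. It compares selecting features once on the whole dataset with selecting them again inside each training fold.

```python
import random

def make_noise_data(n_samples=40, n_features=1000, seed=0):
    """Pure-noise features with balanced labels: nothing is truly predictive."""
    rng = random.Random(seed)
    X = [[rng.gauss(0.0, 1.0) for _ in range(n_features)] for _ in range(n_samples)]
    y = [i % 2 for i in range(n_samples)]
    return X, y

def class_means(X, y, rows, cols):
    """Per-class mean of each column in `cols`, computed on `rows` only."""
    means = {}
    for label in (0, 1):
        members = [i for i in rows if y[i] == label]
        means[label] = [sum(X[i][c] for i in members) / len(members) for c in cols]
    return means

def select_features(X, y, rows, k):
    """Keep the k columns with the largest gap between the two class means."""
    cols = range(len(X[0]))
    m = class_means(X, y, rows, cols)
    gaps = [abs(a - b) for a, b in zip(m[0], m[1])]
    return sorted(cols, key=lambda c: gaps[c], reverse=True)[:k]

def predict(X, means, cols, i):
    """Nearest-centroid prediction for row i on the selected columns."""
    def dist(label):
        return sum((X[i][c] - mu) ** 2 for c, mu in zip(cols, means[label]))
    return 0 if dist(0) <= dist(1) else 1

def cross_validate(X, y, k_features, n_folds=5, select_inside=True):
    """Accuracy over all folds, selecting features inside or outside the folds."""
    all_rows = list(range(len(X)))
    # Selection outside the folds sees every example, including future test rows.
    outer_cols = None if select_inside else select_features(X, y, all_rows, k_features)
    correct = 0
    for fold in range(n_folds):
        test = [i for i in all_rows if i % n_folds == fold]
        train = [i for i in all_rows if i % n_folds != fold]
        cols = select_features(X, y, train, k_features) if select_inside else outer_cols
        means = class_means(X, y, train, cols)
        correct += sum(predict(X, means, cols, i) == y[i] for i in test)
    return correct / len(X)

X, y = make_noise_data()
biased = cross_validate(X, y, k_features=20, select_inside=False)
honest = cross_validate(X, y, k_features=20, select_inside=True)
print(f"selection outside CV: {biased:.2f}  selection inside CV: {honest:.2f}")
```

Since the labels are unrelated to the features, an honest estimate should sit near chance. Any accuracy clearly above that in the "outside" setup comes purely from the selection step having already seen the test examples.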

I read somewhere that Naive Bayes is a good model for feature selection, as it doesn't have to be optimized, is fast, and captures the correlations between the predicted variable and the features. But depending on the size of the dataset, you may have to settle for a single cross-validation.

Regards,

Sebastian